May 2025: Qwen3 Release and OpenAI’s Bet on Windsurf’s Code Editor

The AI landscape continues to evolve rapidly, with two major developments this month: the release of Qwen3 and OpenAI’s reported talks to acquire Windsurf. Both highlight the intensifying competition in the general-purpose LLM and coding assistant markets.

Qwen3: A New Contender in the LLM Arena

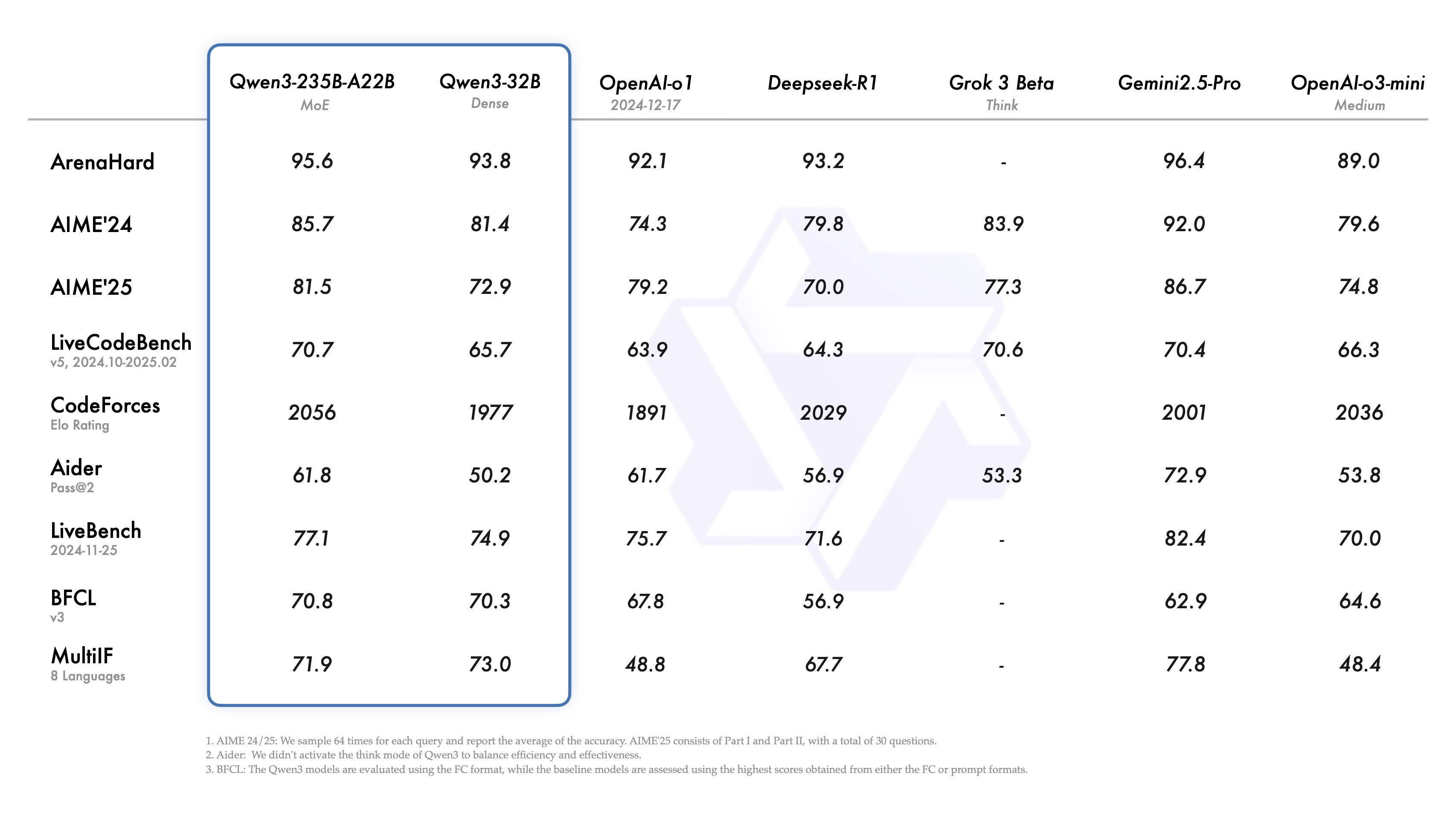

The Qwen team has announced the release of Qwen3, marking a significant advancement in their large language model family. The flagship model, Qwen3-235B-A22B, demonstrates competitive performance across various benchmarks, including coding, mathematics, and general capabilities. It stands toe-to-toe with other leading models like DeepSeek-R1, o1, o3-mini, Grok-3, and Gemini-2.5-Pro.

What makes Qwen3 particularly interesting is its efficient architecture. The smaller MoE model, Qwen3-30B-A3B, outperforms QwQ-32B while activating only about a tenth as many parameters per token (roughly 3B versus 32B). Even more impressive is the tiny Qwen3-4B model, which rivals the performance of Qwen2.5-72B-Instruct despite having far fewer parameters.
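The arithmetic behind that comparison can be read off the model names, where the "A" suffix gives the activated parameter count. The sketch below uses nominal counts taken from the names (rounded assumptions, not exact published figures) and treats QwQ-32B as a dense model in which every parameter is active.

```python
# Nominal parameter counts in billions, read off the model names.
# These are rounded assumptions for illustration, not exact figures.
MODELS = {
    "Qwen3-235B-A22B": {"total": 235, "active": 22},
    "Qwen3-30B-A3B": {"total": 30, "active": 3},
    "QwQ-32B": {"total": 32, "active": 32},  # dense: all parameters active
}


def active_fraction(name: str) -> float:
    """Share of a model's own parameters that are active per token."""
    m = MODELS[name]
    return m["active"] / m["total"]


def active_ratio(a: str, b: str) -> float:
    """Activated parameters of model a relative to model b."""
    return MODELS[a]["active"] / MODELS[b]["active"]


if __name__ == "__main__":
    for name in MODELS:
        print(f"{name}: {active_fraction(name):.1%} of parameters active per token")
    ratio = active_ratio("Qwen3-30B-A3B", "QwQ-32B")
    print(f"Qwen3-30B-A3B activates {ratio:.1%} as many parameters as QwQ-32B")
```

With these nominal numbers, both MoE models activate roughly a tenth of their own parameters per token, and Qwen3-30B-A3B activates a little under a tenth of what QwQ-32B does.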

Inference Performance

Recent benchmarks show impressive inference speeds for Qwen3-235B when running on high-end hardware:

Current LLM Leaderboard

Here’s a snapshot of the current top 10 models in the LLM Arena leaderboard:

OpenAI’s Strategic Move: Acquiring Windsurf

In a significant market development, OpenAI is reportedly in talks to acquire Windsurf (formerly known as Codeium) for approximately $3 billion. If completed, the deal would be OpenAI’s largest acquisition to date and would signal a major push into the AI-powered coding assistant market.

Windsurf, founded in 2021 by Varun Mohan and Douglas Chen, has established itself as a key player in the AI coding space with:

- Over 800,000 developer users

- 1,000 enterprise clients

- A model-agnostic design allowing flexibility in LLM choice

The reported talks come at a time when the AI coding assistant market is becoming increasingly competitive, with major players like Anthropic, Google, and Microsoft’s GitHub already offering sophisticated tools.

Market Response and Competition

Google has responded to this development by releasing Gemini 2.5 Pro Preview (I/O edition), which shows significant improvements in coding and web application development capabilities. The model has reportedly overtaken Claude in the WebDev Arena rankings after a 147-point score increase.

This rapid development in the AI coding space highlights the growing importance of AI-assisted programming tools in modern software development. The competition is driving innovation and pushing the boundaries of what’s possible with AI-powered coding assistance.

Practical Comparison and Use Cases

Local Deployment Guide

For meetup members interested in running Qwen3 locally:

- Hardware Requirements

  - Qwen3-4B: Minimum 16 GB GPU RAM

  - Qwen3-30B: Minimum 40 GB GPU RAM

  - Qwen3-235B: Requires multiple A100s
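To sanity-check figures like these for your own setup, a rough rule of thumb is parameters times bytes per parameter. The sketch below is a back-of-the-envelope estimate only: it covers the weights alone, uses nominal parameter counts taken from the model names, and the precision table and the `weight_memory_gb` helper are illustrative assumptions. Real requirements also depend on KV cache, activations, context length, and runtime overhead, so treat the output as a lower bound.

```python
# Approximate bytes per parameter at common precisions (assumed values).
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, dtype: str = "bf16") -> float:
    """Approximate memory for the weights alone, in GB (10^9 bytes).

    params_billion * 1e9 parameters * bytes each / 1e9 bytes per GB
    simplifies to params_billion * bytes_per_param.
    """
    return params_billion * BYTES_PER_PARAM[dtype]


if __name__ == "__main__":
    # Nominal sizes read off the model names, not exact parameter counts.
    for name, params in [("Qwen3-4B", 4), ("Qwen3-30B", 30), ("Qwen3-235B", 235)]:
        row = ", ".join(
            f"{dtype}: {weight_memory_gb(params, dtype):.1f} GB"
            for dtype in BYTES_PER_PARAM
        )
        print(f"{name} -> {row}")
```

For an MoE model, pass the total parameter count rather than the activated count, since every expert has to be resident in memory even though only some of them run for a given token.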

Technical Deep Dive: MoE Architecture

Qwen3’s Mixture of Experts (MoE) architecture represents a significant advancement in efficient model design:

- Sparse Activation: Only about 10% of parameters are activated per token during inference (22B of 235B for Qwen3-235B-A22B, 3B of 30B for Qwen3-30B-A3B)

- Expert Routing: Dynamic selection of specialized sub-networks

- Compute Efficiency: Far less computation per token than a dense model of the same total size, although all experts must still be loaded into memory
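The routing idea in the list above can be illustrated with a toy top-k gate. This is a generic MoE sketch in plain Python, not Qwen3’s actual implementation: the expert count, `top_k`, the single-matrix "experts", and the softmax-over-selected-experts gating are all assumptions chosen for illustration.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(dim, rng):
    """A toy 'expert': one dim x dim linear map with random weights."""
    w = [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(dim)]
    return lambda x: [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def moe_forward(x, router_weights, experts, top_k=2):
    """Route one token vector through its top_k highest-scoring experts."""
    # Router: one logit per expert (dot product of router row and token).
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]
    # Sparse activation: only the top_k experts are ever evaluated.
    order = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)
    chosen = order[:top_k]
    # Renormalise the gate weights over the chosen experts only.
    gates = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for gate, idx in zip(gates, chosen):
        y = experts[idx](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen, gates


if __name__ == "__main__":
    rng = random.Random(0)
    dim, n_experts = 8, 16  # hypothetical sizes, not Qwen3's configuration
    experts = [make_expert(dim, rng) for _ in range(n_experts)]
    router = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n_experts)]
    token = [rng.gauss(0, 1) for _ in range(dim)]
    out, chosen, gates = moe_forward(token, router, experts, top_k=2)
    print(f"experts used: {chosen} of {n_experts}")
```

Here only 2 of the 16 toy experts run for the token, while the full list of experts still has to exist in memory so the router can pick any of them.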

Looking Ahead

These developments suggest that we’re entering a new phase in AI development, where:

- Model efficiency is becoming increasingly important

- Specialized tools for specific domains (like coding) are gaining prominence

- The market is consolidating as major players make strategic acquisitions

The next few months will likely see more developments in this space as companies race to provide the most effective AI-powered development tools.

OFF-TOPIC: 3D Scene Composition Demo

Source: Open Source A3D 3D Scene Composer & Character Poser